There are various reasons why organizations prefer microservices over monolithic applications.

But do we still face challenges in a microservice architecture? Yes!

What challenges do we face when building microservice applications, and why do we need a dedicated layer for communication between microservices?

When we move from a monolithic to a microservice architecture, we run into several challenges that do not arise in monolithic applications.

For example, consider an online shop application made up of multiple microservices: web services, payment logic that accesses a payment gateway, a shopping cart, inventory, a database, and many more.

Challenges in Microservices:

If you are using Kubernetes, these microservices are deployed into a Kubernetes cluster. Each microservice runs its business logic in its own container, but when a customer accesses the application through the web server, the microservices need reliable communication between them.

There are a few things that application developers otherwise have to handle manually in each microservice:

1) Microservices need to communicate with each other, so each one must know the endpoints of the services it calls. Configuring and maintaining these endpoints so that the application keeps working is additional work for developers.

2) A microservice environment typically has firewall rules set up around the Kubernetes cluster, or a proxy that acts as the entry point to the cluster.

Once a request is inside the cluster, however, the communication is insecure: microservices talk to each other freely, and any service can call any other service without restriction. For applications that need a higher level of security, such as those handling banking data, this is not acceptable.

Securing this internal traffic requires additional configuration inside each service, so developers have to pay special attention to the security setup of microservice applications.
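To make that extra work concrete, here is a minimal sketch of the kind of default-deny allow-list check each service would otherwise carry in its own code. The service names and the `ALLOWED_CALLERS` table are hypothetical, and the sketch assumes the caller's name is already trustworthy; a real service would first have to authenticate the caller (for example via certificates). In Istio this kind of rule is instead declared as configuration and enforced by the proxies, not written into application code like this.

```python
# Hypothetical allow-list: destination service -> services allowed to call it.
ALLOWED_CALLERS: dict[str, set[str]] = {
    "payment": {"cart"},
    "inventory": {"cart", "web"},
    "cart": {"web"},
}


def is_allowed(source: str, destination: str) -> bool:
    """Default deny: a call is permitted only if it is explicitly listed.

    Assumes `source` has already been authenticated; this function does not
    verify who the caller really is.
    """
    return source in ALLOWED_CALLERS.get(destination, set())


if __name__ == "__main__":
    print(is_allowed("cart", "payment"))  # the cart may call payment
    print(is_allowed("web", "payment"))   # the web front end may not
```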

3) Microservice applications need to be robust: if one microservice loses its connection to another, retry logic is needed to reconnect.

Developers have to add this retry logic to the services, which is again additional effort for developers and testers, who must write the logic and test it in both testing and production environments.
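As an illustration, this is roughly what hand-written retry logic looks like inside a service. It is a minimal sketch assuming that a failed connection surfaces as Python's built-in `ConnectionError` and that exponential backoff is the desired policy; the function name and defaults are illustrative choices, not taken from any particular library.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(call: Callable[[], T], attempts: int = 3,
                    base_delay: float = 0.1) -> T:
    """Invoke `call`, retrying on ConnectionError with exponential backoff."""
    for attempt in range(attempts):
        try:
            return call()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # Wait base_delay, then 2x, then 4x, ... before the next attempt.
            time.sleep(base_delay * (2 ** attempt))
    raise ValueError("attempts must be at least 1")


if __name__ == "__main__":
    remaining_failures = {"count": 2}

    def flaky_inventory_call() -> str:
        # Hypothetical downstream call that fails twice before succeeding.
        if remaining_failures["count"] > 0:
            remaining_failures["count"] -= 1
            raise ConnectionError("inventory service unavailable")
        return "in stock"

    print(call_with_retry(flaky_inventory_call, base_delay=0.01))
```

Every service that calls another service would need a copy of something like this, plus tests for it, which is exactly the duplication a service mesh aims to remove.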

4) Services require performance monitoring: HTTP errors, the number of requests received and sent, and how long each request takes, so that you can identify the bottlenecks of your application. Developers need to add monitoring logic to each service, for example using Prometheus and tracing libraries to expose metrics and collect tracing data.
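The following is a minimal, standard-library-only sketch of that per-service monitoring code: it counts requests and errors and records latency so the slowest service can be spotted. In practice you would more likely use a Prometheus client library; the `RequestMetrics` class, the idea of a handler returning an HTTP status code, and the rule that status codes of 500 and above count as errors are all assumptions made for illustration.

```python
import time
from collections import defaultdict
from typing import Callable


class RequestMetrics:
    """Hand-rolled per-service metrics: request counts, errors, latencies."""

    def __init__(self) -> None:
        self.requests: dict[str, int] = defaultdict(int)
        self.errors: dict[str, int] = defaultdict(int)
        self.latencies: dict[str, list[float]] = defaultdict(list)

    def record(self, service: str, handler: Callable[[], int]) -> int:
        """Run `handler` (which returns an HTTP status code) and record metrics."""
        start = time.perf_counter()
        status = handler()
        elapsed = time.perf_counter() - start
        self.requests[service] += 1
        if status >= 500:  # assumption: only server errors count as errors
            self.errors[service] += 1
        self.latencies[service].append(elapsed)
        return status

    def slowest(self) -> str:
        """Service with the highest average latency (a likely bottleneck).

        Raises ValueError if nothing has been recorded yet.
        """
        return max(self.latencies,
                   key=lambda s: sum(self.latencies[s]) / len(self.latencies[s]))
```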

Overall, every service needs additional code to handle concerns that are unrelated to its business logic. This increases the complexity of the services instead of keeping them simple and lightweight.

Service Mesh:

Wouldn't it be better to extract all of this non-business logic into a separate layer?

A service mesh extracts the non-business logic of each microservice into a sidecar application that runs alongside it and acts as a proxy. This third-party sidecar handles the networking logic, and it is configured separately from the application itself. This allows developers to focus on features and business logic instead of non-business logic.

Let's see how a service mesh works in developers' favor.

1) Communication between Microservices:

A service mesh has a control plane that automatically injects the proxy into every microservice when it is deployed. The microservices then communicate with each other through these proxies.
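To show what "injecting the proxy" means, here is a simplified sketch that treats a pod spec as a plain dictionary and adds a proxy container when the namespace is opted in. In Istio this is done by a mutating admission webhook when a pod is created in a namespace labeled `istio-injection=enabled`, and the container it adds is named `istio-proxy`; the real injector also adds much more (typically an init container that redirects traffic, volumes, and environment settings). The image name and the dictionary-based function are hypothetical.

```python
import copy

# Hypothetical image reference; a real installation uses the Envoy-based
# proxy image configured for its Istio version.
PROXY_IMAGE = "registry.example.com/istio-proxy:latest"


def inject_sidecar(pod: dict, namespace_labels: dict) -> dict:
    """Return a copy of `pod` with a proxy container added, if injection is on."""
    if namespace_labels.get("istio-injection") != "enabled":
        return pod  # namespace not opted in: leave the pod unchanged
    injected = copy.deepcopy(pod)  # never mutate the caller's pod spec
    containers = injected["spec"]["containers"]
    if not any(c["name"] == "istio-proxy" for c in containers):
        containers.append({"name": "istio-proxy", "image": PROXY_IMAGE})
    return injected


if __name__ == "__main__":
    payment_pod = {
        "metadata": {"name": "payment"},
        "spec": {"containers": [{"name": "payment", "image": "example/payment:1.0"}]},
    }
    result = inject_sidecar(payment_pod, {"istio-injection": "enabled"})
    print([c["name"] for c in result["spec"]["containers"]])
```

The application container itself is untouched, which is the point: the business logic stays in `payment`, while networking concerns move to the added proxy.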

2) Traffic splitting:

This is an additional benefit of using a service mesh. Suppose a microservice changes: for example, a new version v2.0 of the payment service is built and deployed to production. The new version still needs validation and test coverage to catch bugs, and the product owners are not yet confident in it.

With a service mesh, you can route only 10% (or even 1%) of the traffic to v2.0 to make sure it works, while the remaining 90% (or 99%) continues to go to payment service v1.0.
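The idea behind a weighted split can be sketched in a few lines: for each request, pick a destination with probability proportional to its weight. This is only an illustration of weighted selection; in Istio you declare the weights in configuration and the proxies apply them, so you do not write this code yourself. The version names are hypothetical.

```python
import random
from collections import Counter

# Weights in percent, matching the 90/10 example above (names are hypothetical).
ROUTES = [("payment-v1", 90), ("payment-v2", 10)]


def pick_version(rng: random.Random, routes=ROUTES) -> str:
    """Choose a destination with probability proportional to its weight."""
    total = sum(weight for _, weight in routes)
    point = rng.random() * total  # uniform in [0, total)
    cumulative = 0
    for version, weight in routes:
        cumulative += weight
        if point < cumulative:
            return version
    return routes[-1][0]  # guard against floating-point edge cases


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so runs are repeatable
    print(Counter(pick_version(rng) for _ in range(10_000)))
```

Over many requests, roughly nine out of ten go to v1 and one in ten to v2; shifting the weights gradually is how a canary rollout proceeds.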

Istio Architecture:

By definition, “Istio is a service mesh, a popular solution that manages communication between the individual microservices that make up cloud-native applications”.

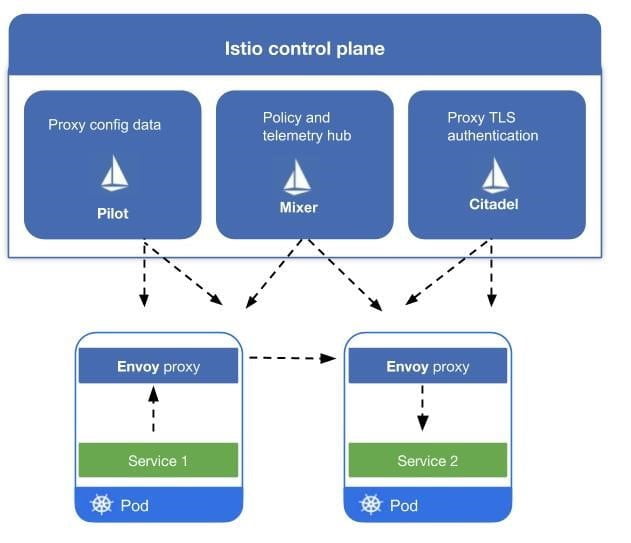

A service mesh is a pattern or paradigm, and Istio is one implementation of it. Istio uses proxies from the open-source Envoy project as the sidecars of its service mesh architecture. The control plane component, Istiod, consolidates several components that older Istio releases ran separately, such as Pilot, Galley, and Citadel (Mixer, another older component, was deprecated and later removed). These components manage and distribute configuration, discover the microservices, and issue certificates to them.

Configure Istio:

So now we have an implementation that reduces developers' non-development tasks.

But how do we configure all these features for our microservice application?

Istio configuration is separate from the application configuration. Istio is configured with Kubernetes YAML files, because it extends the Kubernetes API using CRDs.

What is a CRD? A CRD (CustomResourceDefinition) extends the Kubernetes API with new resource types. Third-party technologies such as Istio and Prometheus use CRDs for their configuration, and the resulting custom resources can be managed just like native Kubernetes objects.
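As an example of what such configuration looks like, the sketch below builds the two Istio custom resources behind the 90/10 payment split as Python dictionaries and prints them as JSON, which kubectl accepts as well as YAML. The service name `payment`, the `version` pod label, and the chosen `apiVersion` are assumptions: depending on your Istio release the networking API may be `v1alpha3`, `v1beta1`, or `v1`, so check your installation before applying anything like this.

```python
import json


def payment_canary(v2_weight: int) -> list[dict]:
    """Build a DestinationRule and VirtualService splitting traffic v1/v2."""
    if not 0 <= v2_weight <= 100:
        raise ValueError("v2_weight must be between 0 and 100")
    destination_rule = {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "DestinationRule",
        "metadata": {"name": "payment"},
        "spec": {
            "host": "payment",
            # Each subset names one version by the labels on its pods.
            "subsets": [
                {"name": "v1", "labels": {"version": "v1"}},
                {"name": "v2", "labels": {"version": "v2"}},
            ],
        },
    }
    virtual_service = {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": "payment"},
        "spec": {
            "hosts": ["payment"],
            "http": [{
                # The weights of the routes in one rule add up to 100.
                "route": [
                    {"destination": {"host": "payment", "subset": "v1"},
                     "weight": 100 - v2_weight},
                    {"destination": {"host": "payment", "subset": "v2"},
                     "weight": v2_weight},
                ],
            }],
        },
    }
    return [destination_rule, virtual_service]


if __name__ == "__main__":
    manifest = {"apiVersion": "v1", "kind": "List", "items": payment_canary(10)}
    print(json.dumps(manifest, indent=2))
```

Rolling v2 forward is then a configuration change (a different `v2_weight`) rather than a code change in the payment service.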

Istio Service features:

These are the features Istio provides for microservice applications.

- Configuration discovery:

Istiod acts as the central registry for all the microservices. Instead of endpoints being configured statically, each new microservice is registered automatically in the service registry.

- Service Discovery:

Istiod detects the services and endpoints in the cluster. Using this service registry, the proxies know the endpoints they need to send traffic to the relevant services.
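The data structure behind service discovery can be illustrated with a toy registry: instances register their endpoints under a service name, and a caller (here, standing in for a proxy) looks them up instead of relying on hard-coded addresses. This is a simplified sketch; Istiod builds its registry automatically from the cluster's Kubernetes services and endpoints and pushes it to the proxies. The class, the IP addresses, and the round-robin choice are all illustrative assumptions.

```python
class ServiceRegistry:
    """Toy registry: instances register endpoints, callers look them up."""

    def __init__(self) -> None:
        self._endpoints: dict[str, list[str]] = {}
        self._next_index: dict[str, int] = {}

    def register(self, service: str, endpoint: str) -> None:
        endpoints = self._endpoints.setdefault(service, [])
        if endpoint not in endpoints:
            endpoints.append(endpoint)

    def deregister(self, service: str, endpoint: str) -> None:
        if endpoint in self._endpoints.get(service, []):
            self._endpoints[service].remove(endpoint)

    def endpoints(self, service: str) -> list[str]:
        return list(self._endpoints.get(service, []))

    def pick(self, service: str) -> str:
        """Round-robin over the currently registered endpoints."""
        endpoints = self._endpoints.get(service)
        if not endpoints:
            raise LookupError(f"no endpoints registered for {service!r}")
        index = self._next_index.get(service, 0) % len(endpoints)
        self._next_index[service] = index + 1
        return endpoints[index]


if __name__ == "__main__":
    registry = ServiceRegistry()
    registry.register("payment", "10.0.0.1:8080")  # hypothetical pod addresses
    registry.register("payment", "10.0.0.2:8080")
    print(registry.pick("payment"), registry.pick("payment"))
```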

- Certificate authority:

Istiod generates certificates for the microservices in the cluster. This enables secure TLS communication between the proxies of the microservices.
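Istio's workload certificates identify each workload with a SPIFFE ID of the form `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>` (the default trust domain is `cluster.local`). The sketch below only builds and parses that identifier string, which is what a policy check would compare against after the TLS handshake; it does not perform TLS or validate any certificate. The namespace and service-account names in the example are hypothetical.

```python
from typing import NamedTuple


class WorkloadIdentity(NamedTuple):
    trust_domain: str
    namespace: str
    service_account: str


def to_spiffe_id(identity: WorkloadIdentity) -> str:
    """Format an identity as spiffe://<trust-domain>/ns/<ns>/sa/<sa>."""
    return (f"spiffe://{identity.trust_domain}"
            f"/ns/{identity.namespace}/sa/{identity.service_account}")


def parse_spiffe_id(uri: str) -> WorkloadIdentity:
    """Parse a SPIFFE ID in the layout above; reject anything else."""
    prefix = "spiffe://"
    if not uri.startswith(prefix):
        raise ValueError(f"not a SPIFFE ID: {uri!r}")
    parts = uri[len(prefix):].split("/")
    # Expected parts: [trust-domain, "ns", namespace, "sa", service-account]
    if len(parts) != 5 or parts[1] != "ns" or parts[3] != "sa":
        raise ValueError(f"unexpected SPIFFE ID layout: {uri!r}")
    return WorkloadIdentity(parts[0], parts[2], parts[4])


if __name__ == "__main__":
    peer = parse_spiffe_id("spiffe://cluster.local/ns/shop/sa/cart")
    print(peer.namespace, peer.service_account)
```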

- Metrics and tracing data:

The proxies generate metrics and tracing data for the traffic passing through them, which monitoring tools such as Prometheus and tracing servers can collect. This gives you metrics and tracing data out of the box for all microservice applications.

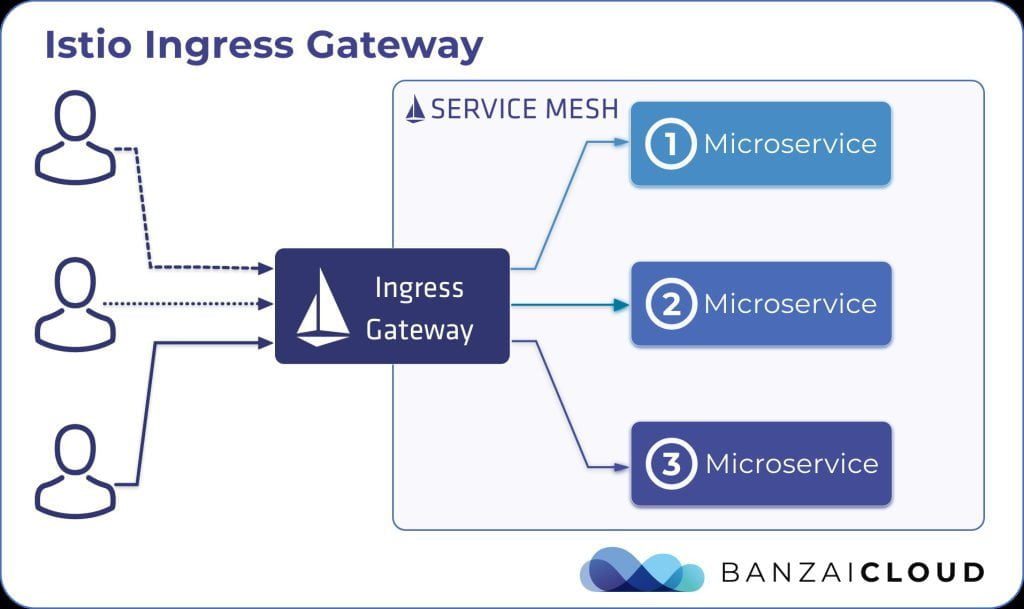

- Istio Ingress Gateway:

This is the entry point to the Kubernetes cluster; the Istio Ingress Gateway is an alternative to the NGINX Ingress Controller. The Istio Ingress Gateway runs as a pod in the cluster and acts as a load balancer: it accepts incoming traffic entering your cluster and directs it to one of your microservices inside the cluster using VirtualService components. The gateway itself is configured using the Gateway CRD.

- Traffic flow with Istiod:

When a user sends a request to the web server, it first hits the gateway, since that is the entry point of the cluster. The gateway evaluates the VirtualService rules and the DestinationRule before forwarding the request to the proxies of the target microservices.
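The decision the gateway makes can be sketched as two lookups: VirtualService-style rules are checked in order and the first matching rule picks a destination host and subset, then a DestinationRule-style table maps that subset to the labels of the pods that receive the request. This is a simplified model, not Istio's implementation; the prefixes, service names, and labels are hypothetical, and real match rules support far more than URI prefixes.

```python
from dataclasses import dataclass


@dataclass
class Route:
    prefix: str  # URI prefix to match (like an HTTP match rule)
    host: str    # destination service
    subset: str  # destination subset, defined by a DestinationRule


# Hypothetical VirtualService-like rules, evaluated top to bottom.
VIRTUAL_SERVICE_RULES = [
    Route("/pay", "payment", "v1"),
    Route("/cart", "cart", "v1"),
    Route("/", "web", "v1"),  # catch-all for anything starting with "/"
]

# Hypothetical DestinationRule-like subsets: (host, subset) -> pod labels.
DESTINATION_SUBSETS = {
    ("payment", "v1"): {"app": "payment", "version": "v1"},
    ("cart", "v1"): {"app": "cart", "version": "v1"},
    ("web", "v1"): {"app": "web", "version": "v1"},
}


def route_request(path: str) -> dict[str, str]:
    """Return the pod labels a request for `path` would be routed to."""
    for rule in VIRTUAL_SERVICE_RULES:
        if path.startswith(rule.prefix):  # first matching rule wins
            return DESTINATION_SUBSETS[(rule.host, rule.subset)]
    raise LookupError(f"no route for {path!r}")


if __name__ == "__main__":
    print(route_request("/pay/checkout"))
    print(route_request("/products"))
```

Rule order matters: because the catch-all `/` rule comes last, more specific prefixes such as `/pay` are matched first.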

Takeaway:

Istio is gaining momentum for microservice applications. It not only reduces developers' non-development tasks but also helps manage traffic flow and collect metrics for further analysis.